How to Set Up the Perfect Robots.txt File

Last Updated / Reviewed : January 5th, 2025

Execution Time : 30 mins

Goal : To properly create or optimize your robots.txt file.

Ideal Outcome : You have an excellent robots.txt on your website that lets search engines crawl your website exactly as you want them to.

Pre-requisites or requirements :

■ You need to have access to the Google Search Console property of the website you are working on. If you don’t have a Google Search Console property set up yet, you can create one by following this SOP.

Why this is important : Your robots.txt sets the fundamental rules that most search engines read and follow once they start crawling your website. It tells search engines which parts of your website you don’t want them (or they don’t need) to crawl.

Where this is done : In a text editor, and in Google Search Console. If you are using WordPress, in your WordPress Admin Panel as well.

When this is done : Audit your robots.txt at least every 6 months to make sure it is still current, and create a proper robots.txt whenever you start a new website.

Who does this : The person responsible for SEO in your organization.

Auditing your current Robots.txt

1. Open your current robots.txt in your browser.

a. Note : You can find your robots.txt by going to ‘http://yourdomain.com/robots.txt’. Replace “http://yourdomain.com” with your actual domain name.

i. Example : http://asiteaboutemojis.com/robots.txt

b. Note 2 : If you can’t find your robots.txt, your website may not have one. In that case, jump to the “Creating a Robots.txt” chapter of this SOP.

2. Go through the following checklist and fix each issue you find:

🗹 Your robots.txt has been validated by Google Search Console’s robots.txt tester.

i. Note : If you are not sure how to use the tool, follow the “Validating your Robots.txt” chapter at the end of this SOP, then come back to this chapter.

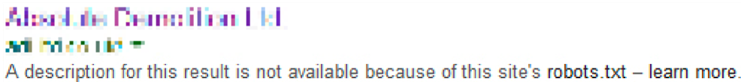

🗹 Do not rely on robots.txt alone to remove pages from Google’s search results.

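i. Example : If you don’t want “http://asiteaboutemojis.com/passwords” to appear in the search results, blocking “/passwords” will not remove that page from Google. The page will likely remain indexed, and Google will show it without a description (typically noting that no information is available for the page). To keep a page out of the results, use a “noindex” directive and leave the page crawlable so Google can see it, or password-protect the page.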

🗹 Your robots.txt disallows crawling of unimportant system pages.

i. Example : default pages, server logs, etc.

🗹 You are disallowing sensitive data from being crawled.

i. Example : internal documents, customer data, etc.

ii. Remember : Blocking access in robots.txt is not enough to prevent those pages from being indexed. Sensitive pages and files should not only be removed from search engines but also be password protected. Attackers frequently read robots.txt files to find confidential paths, and if there is no other protection in place, nothing stops them from stealing your data.

🗹 You are not disallowing important scripts that are necessary to render your pages correctly.

i. Example : Do not block JavaScript or CSS files that are necessary to render your content correctly.

3. To make sure the checklist didn’t miss any pages, perform the following test:

i. Open Google in your browser (the version relevant to the country you are targeting, for instance google.com).

ii. Search for “site:yourdomain.com” (replace yourdomain.com with your real domain name).

1. Example : site:asiteaboutemojis.com

iii. You will see the search results that Google has indexed for that domain. Glance through as many of them as you can to find results that don’t meet the guidelines of the checklist.
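The checklist can be partly scripted. Below is a minimal Python sketch, assuming Python 3 and only its standard library: it parses a robots.txt with `urllib.robotparser` and reports URLs that are blocked but should be crawlable (content pages, rendering scripts) and URLs that are crawlable but should be blocked (system pages). The rules and URLs in it are hypothetical placeholders. Keep in mind that `urllib.robotparser` only does simple prefix matching: it ignores the “*” and “$” wildcards and applies the first matching rule rather than Google’s most-specific-rule precedence, so treat its results as a first pass and confirm them in Google Search Console.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; paste your live file here instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /server-logs/
Disallow: /default/
Disallow: /assets/js/
"""

# Hypothetical URLs that must stay crawlable (content pages, rendering scripts).
MUST_ALLOW = [
    "http://asiteaboutemojis.com/emojis/red",
    "http://asiteaboutemojis.com/assets/js/render.js",
]

# Hypothetical URLs that should not be crawled (system pages).
MUST_BLOCK = [
    "http://asiteaboutemojis.com/server-logs/access.log",
    "http://asiteaboutemojis.com/default/index.html",
]


def audit(robots_txt, must_allow, must_block, user_agent="Googlebot"):
    """Return a list of human-readable checklist violations."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no download
    problems = []
    for url in must_allow:
        if not parser.can_fetch(user_agent, url):
            problems.append(f"blocked but should be crawlable: {url}")
    for url in must_block:
        if parser.can_fetch(user_agent, url):
            problems.append(f"crawlable but should be blocked: {url}")
    return problems


if __name__ == "__main__":
    for problem in audit(ROBOTS_TXT, MUST_ALLOW, MUST_BLOCK):
        print(problem)
```

With the sample rules, it prints a single line flagging “render.js”, because “/assets/js/” is disallowed.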

Creating a Robots.txt

1. Create a plain-text (.txt) document on your computer, name it “robots.txt”, and save it with UTF-8 encoding.

2. Here are two templates you can copy straight away if they fit what you are looking for:

a. Disallowing all crawlers from crawling your entire website:

Important : This will block Google from crawling your entire website, which can severely damage your search engine rankings. Only in very rare circumstances will you want to do this, for instance if it is a staging website or if the website is not meant for the public.

Note : Don’t forget to replace “http://yourdomain.com/yoursitemapname.xml” with the full URL of your actual sitemap.

Note 2 : If you don’t have a sitemap yet, you can create one by following SOP 055.

    User-agent: *
    Disallow: /

    Sitemap: http://yourdomain.com/yoursitemapname.xml

b. Allowing all crawlers to crawl your entire website:

Note : It’s fine to use this robots.txt, but it won’t change the way search engines crawl your website: by default, they assume they can crawl everything that is not blocked by a “Disallow” rule.

Note 2 : Don’t forget to replace “http://yourdomain.com/yoursitemapname.xml” with the full URL of your actual sitemap.

    User-agent: *
    Allow: /

    Sitemap: http://yourdomain.com/yoursitemapname.xml
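To see how a parser reads these two templates, you can load them with Python’s `urllib.robotparser` (Python 3.8 or later, for `site_maps()`). The sketch below, with a hypothetical page URL, shows that the first template blocks everything, the second allows everything, and both expose the sitemap. It also shows why the lines of a group should sit directly below each other in the real file: Python’s parser treats a blank line as the end of a group, so a blank line between “User-agent” and “Disallow” silently drops the rule. This only demonstrates Python’s parser; Google documents its own parsing rules.

```python
from urllib.robotparser import RobotFileParser

# Template a: block every crawler from the whole site.
DISALLOW_ALL = """\
User-agent: *
Disallow: /

Sitemap: http://yourdomain.com/yoursitemapname.xml
"""

# Template b: allow every crawler everywhere (same effect as having no rules).
ALLOW_ALL = """\
User-agent: *
Allow: /

Sitemap: http://yourdomain.com/yoursitemapname.xml
"""

# Template a with a blank line inside the group. Python's parser ends the
# group at the blank line, so the Disallow rule is ignored.
DISALLOW_ALL_SPACED = """\
User-agent: *

Disallow: /
"""


def load(text):
    """Parse robots.txt text without downloading anything."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


page = "http://yourdomain.com/any/page"  # hypothetical URL
for name, text in [("disallow all", DISALLOW_ALL),
                   ("allow all", ALLOW_ALL),
                   ("disallow all, spaced", DISALLOW_ALL_SPACED)]:
    parser = load(text)
    print(f"{name}: can crawl={parser.can_fetch('Googlebot', page)}, "
          f"sitemaps={parser.site_maps()}")
```

The spaced version reports that the page can be crawled and that no sitemap was found, which is the opposite of what the template intends.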

3. In most cases, neither template fits. You will usually want to let all crawlers in, but keep them out of specific paths of your website. If that is the case:

To block a specific path (and its sub-paths) :

i. Start by adding the following line to your file:

User-agent: *

ii. For each path, sub-path, or file type that you don’t want crawlers to access, add an extra line below the “User-agent” line:

a. For paths : Disallow: /your-path

■ Note : Replace “your-path” with the path that you want to block. Remember that all sub-paths below that path will be blocked as well, along with any other URL that starts with the same characters (for instance “/your-path-old”).

■ Example : “Disallow: /emojis” will disallow crawlers from accessing “asiteaboutemojis.com/emojis” and also from accessing “asiteaboutemojis.com/emojis/red”.

b. For filetypes : Disallow: /*.filetype$

■ Note : Replace “filetype” with the filetype that you want to block.

■ Example : “Disallow: /*.pdf$” will disallow crawlers from accessing “asiteaboutemojis.com/emoji-list.pdf”.
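Google matches each rule value against the beginning of the URL path (including any query string), with “*” matching any sequence of characters and a trailing “$” marking the end of the URL. The minimal Python sketch below implements that matching so you can check a rule before publishing it; the function name `rule_matches` is hypothetical, and the sketch skips details such as percent-encoding.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Return True if a robots.txt Allow/Disallow pattern matches a URL path.

    `path` is the part of the URL after the domain, including any query
    string, for example "/emoji-list.pdf?download=1".
    """
    # A trailing "$" anchors the pattern to the end of the path.
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # "*" matches any run of characters; everything else is literal.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += r"\Z"  # end of string
    # re.match anchors only at the start, so unanchored patterns act as prefixes.
    return re.match(regex, path) is not None


if __name__ == "__main__":
    checks = [
        ("/emojis", "/emojis"),
        ("/emojis", "/emojis/red"),
        ("/emojis", "/emojis-old"),  # prefix match: also blocked
        ("/emojis", "/about"),
        ("/*.pdf$", "/emoji-list.pdf"),
        ("/*.pdf$", "/emoji-list.pdf?download=1"),  # "$" needs the path to end in .pdf
    ]
    for pattern, path in checks:
        print(f"{pattern!r:12} {path!r:32} {rule_matches(pattern, path)}")
```

Note the third check: “Disallow: /emojis” also matches “/emojis-old”. If you only want to block the folder, end the value with a slash (“/emojis/”), keeping in mind that this version no longer blocks “/emojis” itself.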

To block specific crawlers from crawling your website :

i. Add the following lines at the end of your current robots.txt:

Note : Don’t forget to replace “Emoji Crawler” with the name of the actual crawler you want to block. You can find a list of active crawlers here and see if you want to block any of them specifically. If you are not sure what this means, you can skip this step.

    User-agent: Emoji Crawler
    Disallow: /

Example : To block Google Images’ crawler :

    User-agent: Googlebot-Image
    Disallow: /
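Putting this chapter together, here is a minimal Python sketch (standard library only) that writes a robots.txt which blocks “/emojis” for all crawlers and blocks Google Images’ crawler entirely, saves it as UTF-8 plain text, and reads it back with `urllib.robotparser` to confirm each crawler gets the right group. The paths and sitemap URL are placeholders, and `build_robots_txt` is a hypothetical helper. It leaves out the “/*.pdf$” style of rule because `urllib.robotparser` does not understand wildcards.

```python
from pathlib import Path
from urllib.robotparser import RobotFileParser

SITEMAP = "http://yourdomain.com/yoursitemapname.xml"  # placeholder


def build_robots_txt(groups, sitemap):
    """Render {user_agent: [disallowed paths]} as robots.txt text.

    Lines inside a group stay together; groups are separated by one blank line.
    """
    blocks = []
    for user_agent, paths in groups.items():
        lines = [f"User-agent: {user_agent}"]
        lines += [f"Disallow: {path}" for path in paths]
        blocks.append("\n".join(lines))
    blocks.append(f"Sitemap: {sitemap}")
    return "\n\n".join(blocks) + "\n"


groups = {
    "*": ["/emojis"],          # every crawler stays out of /emojis and its sub-paths
    "Googlebot-Image": ["/"],  # Google Images' crawler stays out of the whole site
}
text = build_robots_txt(groups, SITEMAP)
Path("robots.txt").write_text(text, encoding="utf-8")  # plain text, UTF-8

# Read the rules back to check which crawler may fetch what.
parser = RobotFileParser()
parser.parse(text.splitlines())
print(text)
print(parser.can_fetch("Googlebot", "http://yourdomain.com/"))            # True
print(parser.can_fetch("Googlebot", "http://yourdomain.com/emojis/red"))  # False
print(parser.can_fetch("Googlebot-Image", "http://yourdomain.com/"))      # False
```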

Adding the Robots.txt to your website

This SOP covers two solutions for adding a robots.txt to your website:

● If you are using WordPress on your website and running the Yoast SEO plugin, click here.

○ Note : If you don’t have Yoast installed on your WordPress website, you can install it by following the relevant chapter of SOP 008 by clicking here.

○ Note 2 : If you don’t want to install Yoast on your website, the procedure below (using FTP or SFTP) will also work for you.

● If you are using another platform, you can upload your robots.txt through FTP or SFTP. This procedure is not covered by this SOP. If you don’t know how to upload files to your server, or don’t have access, you can ask your web developer or the company that developed your website to do so. Here is a template you can send by email:

“Hi,

I’ve created a robots.txt to improve the SEO of the website. You can find the file attached to this email. It needs to be placed in the root of the domain and named “robots.txt”.

Here are the technical specifications for this file, in case you need more information: https://developers.google.com/search/reference/robots_txt

Thank you.”

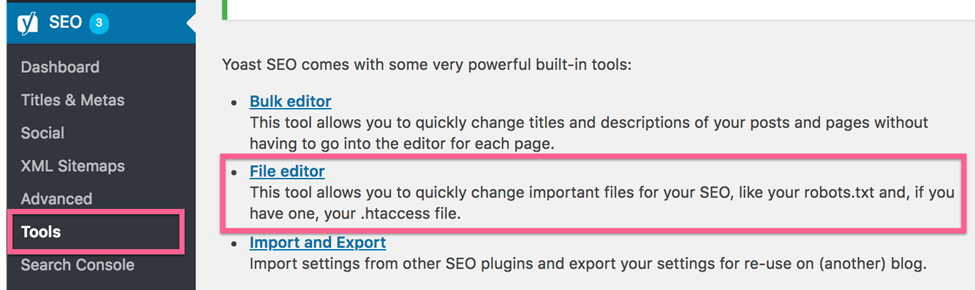

Adding your robots.txt through the Yoast SEO plugin :

1. Open your WordPress Admin.

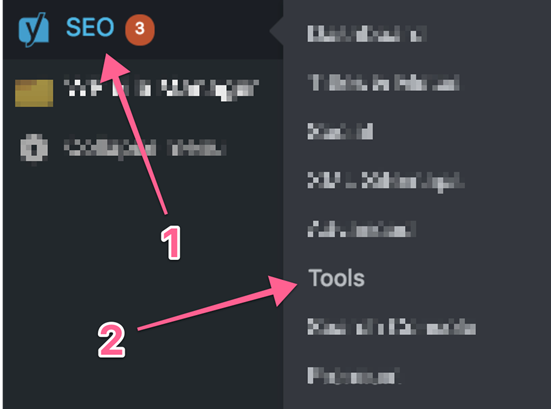

2. Click “SEO” → “Tools”.

Note : If you don’t see this option, you will need to enable “Advanced features” first:

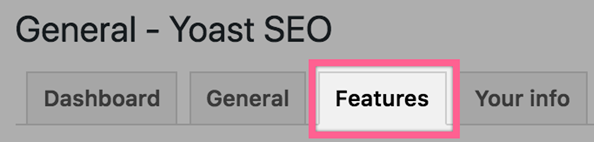

1. Click “SEO” → “Dashboard” → “Features”

2. Enable “Advanced features”

3. Click “Save changes”

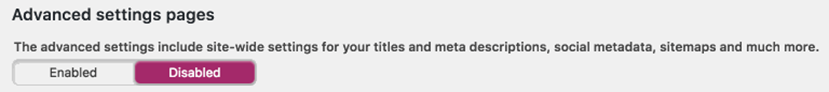

3. Click “File Editor”:

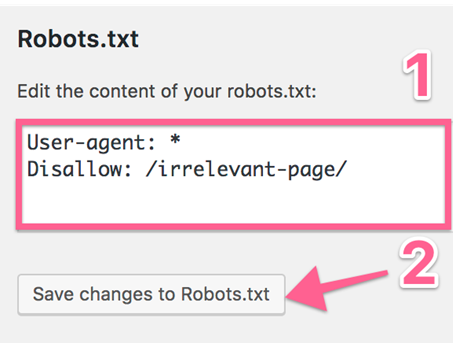

4. You will see an input text box where you can add or edit the text of your robots.txt file → Paste the content of the robots.txt file you have just created → Click “Save Changes to Robots.txt”.

5. To make sure everything worked, open “http://yoursite.com/robots.txt” in your browser.

a. Note : Don’t forget to replace “http://yoursite.com” with your domain name.

i. Example : http://asiteaboutemojis.com/robots.txt

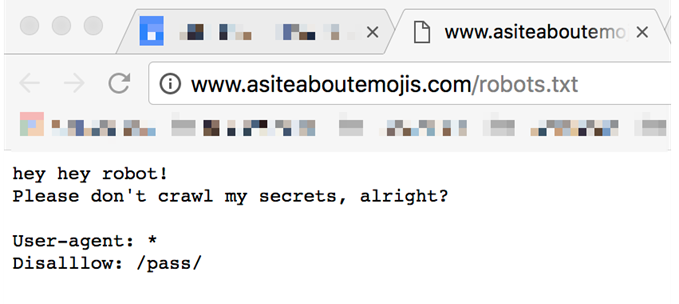

6. You should be able to see your robots.txt live on your website:

a. Example : http://www.asiteaboutemojis.com/robots.txt
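If you prefer a script to the browser check, the minimal Python sketch below (standard library only) builds the robots.txt address from any page of the site, downloads the live file, and compares it with your local copy, ignoring line-ending differences and trailing spaces. The helper names are hypothetical. If the server returns an error such as 404, `urlopen` raises `urllib.error.HTTPError`, which means no file is being served at that address.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(any_page_url):
    """robots.txt lives at the root of the host: scheme://host/robots.txt."""
    parts = urlsplit(any_page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def normalize(text):
    # Ignore Windows/Unix line-ending differences and trailing spaces.
    return [line.rstrip() for line in text.strip().splitlines()]


def matches_local(live_text, local_text):
    """True if the live file and the local copy contain the same lines."""
    return normalize(live_text) == normalize(local_text)


def fetch_live(any_page_url, timeout=10):
    """Download the live robots.txt (requires network access)."""
    with urlopen(robots_url(any_page_url), timeout=timeout) as response:
        return response.read().decode("utf-8")


# Usage (requires network access and your local robots.txt):
#   from pathlib import Path
#   live = fetch_live("http://asiteaboutemojis.com/")
#   local = Path("robots.txt").read_text(encoding="utf-8")
#   print("live file matches" if matches_local(live, local) else "live file differs")
```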

Validating your Robots.txt

1. Open Google Search Console’s robots.txt Tester.

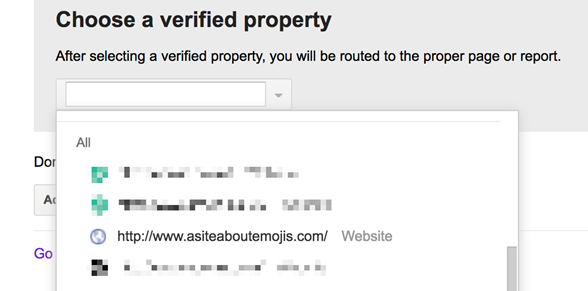

2. If you have multiple properties in that Google account, select the right one:

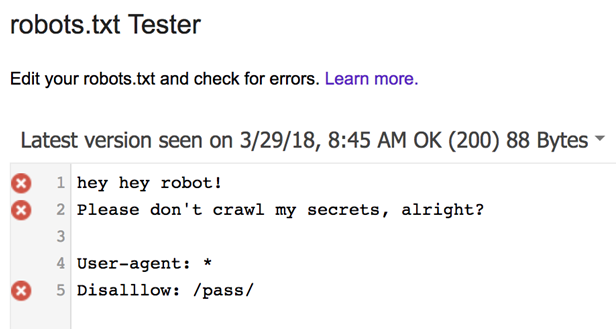

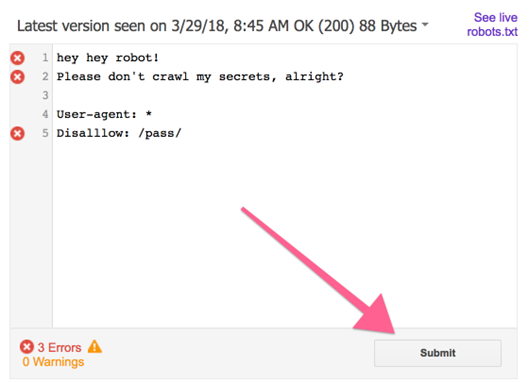

3. You will be sent to the robots.txt Tester tool where you will see the last robots.txt file that Google crawled.

4. Open your live robots.txt and make sure the version you see in Google Search Console’s tool is exactly the same as the one currently live on your domain.

a. Note : You can find your robots.txt by going to ‘http://yourdomain.com/robots.txt’. Replace “http://yourdomain.com” with your actual domain name.

i. Example : http://asiteaboutemojis.com/robots.txt

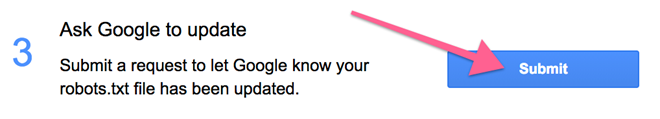

b. Note : If that is not the case, and you’re seeing an outdated version on GSC (Google Search Console):

i. Click “Submit” in the bottom right corner:

ii. Click “Submit” again

iii. Refresh the page by pressing Ctrl+F5 (PC) or Cmd ⌘+R (Mac)

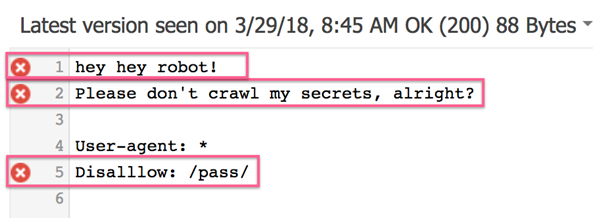

5. If there are no syntax errors or logic warnings in your file, you will see this message:

Note : If there are errors or warnings, they will be displayed in the same spot.


Note 2 : You will also see symbols next to each of the lines that are generating those errors:

If that is the case, make sure you followed the guidelines laid out in this SOP and that your file follows Google’s guidelines here.

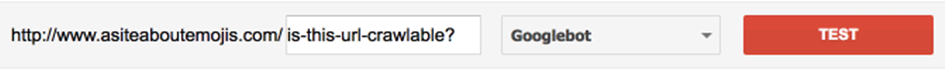

6. If no issues were found in your robots.txt, test your URLs by adding them to the text input box and clicking “Test”:

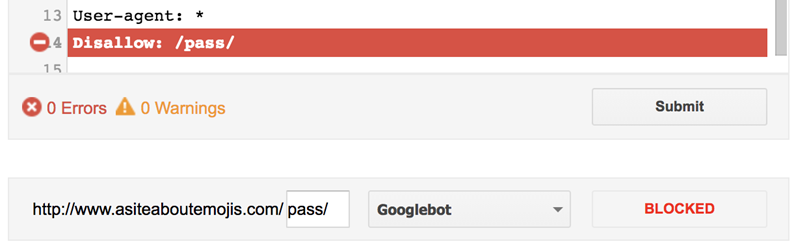

Note : If your URL is being blocked by your robots.txt you will see a “Blocked” message instead, and the tool will highlight the line that is blocking that URL:
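When a URL is blocked, the deciding rule is the one the tester highlights. Google documents that when several “Allow” and “Disallow” rules in a group match a URL, the most specific rule (the longest path) wins, and that in case of a conflict the least restrictive rule (“Allow”) is used. The minimal Python sketch below mimics that decision for a single user-agent group so you can reason about a result offline. It is not Google’s implementation: the names are hypothetical, it does not choose between user-agent groups, it ignores percent-encoding, and it assumes wildcard patterns are measured by their length as written.

```python
import re


def rule_matches(pattern, path):
    """True if a robots.txt pattern ("*" wildcard, optional trailing "$") matches."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    # Unanchored patterns are prefixes; "$" pins the match to the end of the path.
    return re.match(regex + (r"\Z" if anchored else ""), path) is not None


def deciding_rule(rules, path):
    """Return (position, directive, pattern) of the rule that decides `path`.

    `rules` holds (directive, pattern) pairs from ONE user-agent group, in file
    order; position is 1-based within that list. The longest matching pattern
    wins and, on a tie, Allow beats Disallow. Returns None if nothing matches.
    """
    best_key, best_rule = None, None
    for position, (directive, pattern) in enumerate(rules, start=1):
        if not pattern or not rule_matches(pattern, path):
            continue  # an empty value (e.g. "Disallow:") blocks nothing
        key = (len(pattern), directive == "Allow")
        if best_key is None or key > best_key:
            best_key, best_rule = key, (position, directive, pattern)
    return best_rule


def is_allowed(rules, path):
    """A URL is crawlable unless the deciding rule is a Disallow."""
    rule = deciding_rule(rules, path)
    return rule is None or rule[1] == "Allow"


# Hypothetical rules from a single "User-agent: *" group.
rules = [
    ("Disallow", "/emojis"),
    ("Allow", "/emojis/public"),
    ("Disallow", "/*.pdf$"),
]
for path in ["/emojis/red", "/emojis/public/smile", "/emoji-list.pdf", "/about"]:
    print(path, "->", "allowed" if is_allowed(rules, path) else "blocked",
          deciding_rule(rules, path))
```

Here “/emojis/public/smile” stays crawlable because the longer “Allow: /emojis/public” rule outranks “Disallow: /emojis”.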